I’ve found a better home for my Business Intelligence blog.

Friday, April 9, 2010

SSRS – Why Do We Need InScope Function in Matrix Subtotals

Here is a pretty good article about advanced matrix reporting technique. I’ll show you an example where I need to manipulate the Subtotals in a matrix to get a subtotal that is not the default sum of all the data in the group. (I need to check out if this is still true in SSRS 2008.)

By default, the subtotal in the matrix is the sum of all numbers in the defined group. But in many cases, we need to have custom aggregates on a matrix report. Examples are, you need average instead of sum, you need growth percentage instead of sum. In this example, I need to sum only the numbers in bold, and ignore the numbers that are not in bold.

matrix1_RowGroup2: this is the lowest level grouping Dates on the column.

matrix1_ColumnGroup2: this is the lowest level grouping on the row.

To get a custom aggregates, enter this in the Expression editor of the measure cell. (To be continued…)

=iif(inscope("matrix1_ColumnGroup2") and inscope("matrix1_RowGroup2"), IIF(SUM(Fields!ACCT_COUNTER.Value) = 0, "", SUM(Fields!ACCT_COUNTER.Value)), IIF(SUM(Fields!ACCT_COUNTER_SUBTOTAL.Value) = 0, "", SUM(Fields!ACCT_COUNTER_SUBTOTAL.Value)))

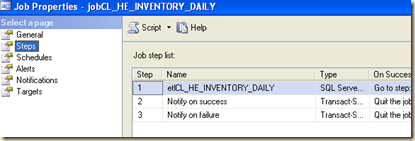

SSIS – Automate a Package in Development

In development, I have no ways to automate a SSIS package via AutoSys.

Here are some simple steps I take to automate execution of a SSIS package.

1) Import the package into SQL Integration Services.

2) Create a job to execute the package.

3) In the Data sources tab, check the appropriate data source(s) that need explicit login credentials, and enter appropriate user id and password (batch login IDs are the best).

Thursday, April 8, 2010

SQL – Index Clustered vs Non-Clustered

Indexes in SQL Server are similar to the indexes in books. They help SQL Server retrieve the data quicker.

In SQL server, indexes can be clustered indexes and non-clustered indexes.

When you create a clustered index on a table, all the rows in the

table are “physically” stored in the order of the clustered index key. So, there can be only one clustered index per table.

Non-clustered indexes have their own storage separate from the table data storage. Data are only “logically” stored in the order (with a row locator).

Appropriate indexes improve the query performance, as the query optimizer can choose from all the existing indexes to come up with an efficient execution plan.

However, too many indexes can slow down other data modification operations (such as INSERT, UPDATE, DELETE). This is because every time data changes in the table, all the indexes need to be updated. Another disadvantage of having too many or unnecessary indexes is that, indexes need disk space, the more indexes you have, more disk space is used.

SQL – Denormalization & Normalization

In the reporting business, we would reverse some of the normalization process.

It's the controlled introduction of redundancy in to

the database design. It helps improve the query performance as the number of joins could be reduced.

Normalization is the process of efficiently organizing data in a database. There are two goals of the normalization process: eliminating redundant data and ensuring data dependencies make sense (only storing related data in a table).

First Normal Form (1NF) – primary key

First normal form (1NF) sets the very basic rules for an organized database:- Eliminate duplicative columns from the same table.

- Create separate tables for each group of related data and identify each row with a unique column or set of columns (the primary key).

Second Normal Form (2NF) – foreign key

Second normal form (2NF) further addresses the concept of removing duplicative data:- Meet all the requirements of the first normal form.

- Remove subsets of data that apply to multiple rows of a table and place them in separate tables.

- Create relationships between these new tables and their predecessors through the use of foreign keys.

Third Normal Form (3NF) – all columns depend on the PK

Third normal form (3NF) goes one large step further:- Meet all the requirements of the second normal form.

- Remove columns that are not dependent upon the primary key.

Fourth Normal Form (4NF)

Finally, fourth normal form (4NF) has one additional requirement:- Meet all the requirements of the third normal form.

- A relation is in 4NF if it has no multi-valued dependencies.

SQL – Confused by keys?

Define candidate key, alternate key, composite key.

A candidate key is one that can identify each row of a table uniquely.

Generally a candidate key becomes the primary key of the table. If the table has more than one candidate key, one of them will become the primary key, and the rest are called alternate keys.

A key formed by combining at least two or more columns is called

composite key.

In the end, it’s all about the uniqueness of each row in the table.

SSAS - AdventureWorksDW sample data warehouse for data mining and OLAP

The Adventure Works DW sample data warehouse is used to demonstrate the business intelligence features that are available in SQL Server. The data in the data warehouse has been trended to support both data mining and Online Analytical Processing (OLAP) scenarios.

The follow is from Microsoft site AdventureWorks Sample Data Warehouse: http://msdn.microsoft.com/en-us/library/ms124623.aspx

The following sections provide more information about the data warehouse itself and about the scenarios that it supports:

The Data Warehouse

Adventure Works DW contains a subset of the tables from the OLTP database, in addition to financial information that is pulled from a separate data source. The data is kept in synch with the OLTP database to support typical Integration Services scenarios such as loading and updating the data warehouse. The Readme_AWDataWarehouseRefresh Package Sample demonstrates how to use an Integration Services package to load data into Adventure Works DW from the Adventure Works OLTP.

Adventure Works DW contains two subject areas, finance and sales, which are described in the following sections. Additionally, the data warehouse contains views that are used to support the data mining scenarios that are described later in this topic.

SSAS – AdventureWorks – A Sample OLTP Database

AdventureWorks is a sample OLTP Database. Objects such as tables, views, and procedures are contained in schemas.

SSAS - AdventureWorksDW

On SQL database engine, here is how this database is defined.

Take a look at one of the Fact table FactInternetSales to see how we define the keys on the Fact table. There are 8 foreign keys are defined, and one alternate key (unique key) defined.

Now take a look at one dimension table DimProduct.

DimCustomer.

SSAS - Analysis Services Tutorial.cube

I got the Analysis Services Tutorial installed. It has one cube with 1 measure, and 5 dimensions.

Here is the Data Source View (Adventure Works DW.dsv) showing one Fact table FactInternetSales, and 4 dimensions, DimProduct, DimTime, DimCustomer, and DimGeography.

The Data Source (Adventure Works DW.ds) is defined here.

with this connection the database AdventureWorksDW on SQL server.

SQL – Remove Time from Datetime Field

I’ve see developers use custom function to remove time from datetime field. It’s obvious that re-usability is preferred by those developers over performance. When we are dealing with millions of records, the custom function will slow down the query dramatically. Instead, I always prefer performance. I use the following code directly in my query.

CONVERT(DATETIME, CONVERT(CHAR(10), B.DT_TM_STAMP, 101)) AS DT

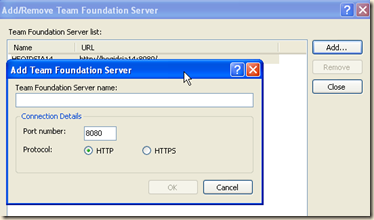

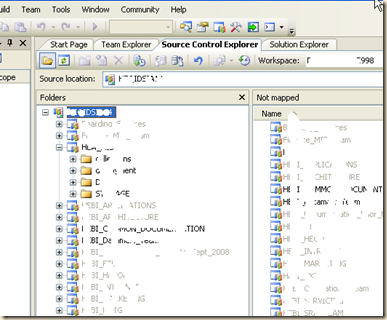

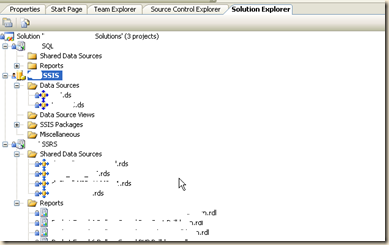

Change Management – TFS: The Solution Structure

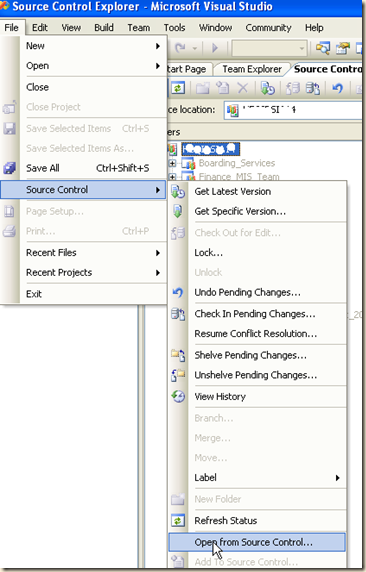

Here is a typical TFS (Team Foundation Server) solution structure in Visual Studio.

First you need to locate the TFS server.

In this example, a TFS server, and some team projects have been set up.

If this is the first time, I’d need to locate the TFS server, by clicking on the Servers.. button above.

Once the TFS server is located, and the team projects are selected, you will get the Source Control Explorer tab where you will see all the projects that you have access to.

The folder structure on the left is showing folders for different teams. The .SLN file on the right will have 4 projects in it, i.e. the dos project, the SQL project, the SSIS project, and the SSRS project.

Here are the 3 projects in the VS solution, the SQL, SSIS and SSRS projects.

Excel PivotTable – From a Stored Procedure

What if we want to source the data from a stored procedure, not directly from a table.

After the OLE DB connection is created, and when Excel is ready to import data, click the Properties button.

In this Connection Properties window, change the Command Type from Table to Default, and enter the command to execute the stored procedure.

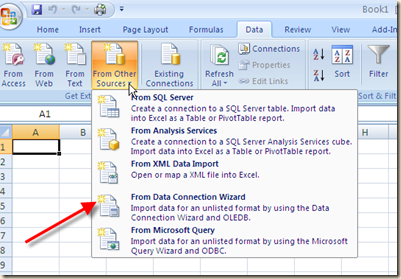

Use OLE DB Query & PivotTable in Excel

Excel can retrieve data from external data sources via ODBC connection or OLE DB connection.

We can use OLE DB query to get cubes in SSAS, or access stored procedures in SQL server.

Give it the SQL server name.

Let’s pick the PivotTable Report type.

We get this PivotTable, and fields are ready to be dropped into the right place.

If we do not need to sub-total, then select option None in the Field Settings for each Row Labels.

If we don’t need grand totals, uncheck these two options in teh PivotTable Options.

AutoSys Job Scheduler

Here is a nice article about AutoSys job scheduler.

AutoSys is used for defining, scheduling and monitoring jobs. These jobs can be a UNIX script, java program or any other program which can be invoked from shell.

As a BI developer, it’s important to understand how AutoSys works at high level. To utilize AutoSys job scheduler, I would specify all the dependencies my process has. These will include any objects on SQL server and also on the host DBMS. Generally speaking, jobs that are scheduled through AutoSys will work more efficiently if the jobs are standalone jobs.

Change Management – Release Instruction

Here is a typical release instruction I would create for the release coordinator to release new changes into production.

Change Management - Visual Source Safe vs. Team Foundation

There are similarities between them, but also fundamental differences. Here they are.

Visual SourceSafe and Team Foundation source control enable you to accomplish the same basic tasks: develop more than one version of a product at the same time, change a released version of a product without affecting other versions, quickly retrieve a batch of related files, determine who made a change and when, compare revisions of a file, and move changes from one version into another.

But despite many similarities, Team Foundation and Visual SourceSafe differ in fundamental ways.

Architectural Differences

SourceSafe Explorer and the plug-in for Visual Studio read from and write to a Visual SourceSafe database, which is a collection of files that are usually stored in a shared network folder.

Team Foundation is a client-server source control system that uses a .NET Web service to access items stored in a SQL Server database. Team Foundation source control's architecture, offers increased performance and reliability.

Functional Differences

Changesets

Conceptually, the internal structure of a Visual SourceSafe database and that of a Team Foundation source control server is similar. Both databases and servers are organized hierarchically. Folders contain files. Files consist of versions that are identified by number and date/time of creation.

Team Foundation advances a concept that does not exist in Visual SourceSafe: changeset. A changeset is a logical container in which Team Foundation stores everything related to a single check-in operation: file and folder revisions, links to related work items, check-in notes, a check-in comment, and other information such as who submitted the change. For more information, see Working with Source Control Changesets.

SQL – UPDATE Statement with JOIN

UPDATE A

SET A.PAY_ORDER = B.PAY_ORDER

FROM tbl_A A

JOIN tbl_B B

ON A.ACCT_NUMBER = B.ACCT_NUMBER

AND A.PAY_DATE = B.PAY_DATE

SQL – Openquery (3) Delete & Insert

If you have a small (less than 10K. larger dataset will kill your server) set of records on SQL server that you want to pass to the linked server, there is a way to use a staging table.

The DELETE statement will delete all records on the staging table. The INSERT statement will pass your dataset to the staging table.

Then you can use the staging table on the linked server to limit the number of records to come back.

Here is the syntax.

Important: the staging table cannot have duplicate records. If it does, the DELETE statement will fail. That’s why it’s important to use DISTINCT in the INSERT statement.

SQL – Openquery (2) Pass Parameters

The syntax for passing parameters is little messy. Here is am example where we pass a date range into the query.

Of cause the temp table needs to be created first for the above query to work.

SQL – Openquery (1) Use It Or Not Use It

From Transact-SQL book: “Openquery executes the specified pass-through query on the specified linked server. This server is an OLE DB data source. OPENQUERY can be referenced in the FROM clause of a query as if it were a table name. OPENQUERY can also be referenced as the target table of an INSERT, UPDATE, or DELETE statement. This is subject to the capabilities of the OLE DB provider. Although the query may return multiple result sets, OPENQUERY returns only the first one.”

This is a typical Openquery that points to a DB2 linked server used in the From clause.

Although OPENQUERY is a convenient way to dynamically query data via a linked server, it has many limitations. Here are what I can think of:

1) Performance: I’ve observed many OPENQUERY failed with unexplained “Interruption” from the remote server in production environment when the SQL and/or the linked server are overloaded.

2) There is an allowable number of simultaneous OPENQUERY connections, 50, 40… (not sure). Rest of the OPENQUERY connections will fail when the server reaches the limit.

3) Cannot call stored procedures on the linked server.

4) Although we can use INSERT, UPDATE, or DELETE statement with OPENQUERY, it is subject to the capabilities of the OLE DB provider, and the syntax is not straightforward (will talk about this later).

5) When temp tables used inside OPENQUERY, the performance of the query deteriorate dramatically. To resolve the performance issue, very often we have to abandon OPENQUERY, and move the entire process to a SSIS package to take advantage of staging tables etc.

6) Anybody who is crazy enough to have more than 8000 chars inside OPENQUERY will tell you that this is not possible. SQL server will tell you plainly that the query cannot have more than 8000 chars.

Wednesday, April 7, 2010

SSRS – Data Cache Issue

“Do not cache temporary …” is the default setting on our reporting server. However, it you are testing your report without leaving the report, you do need to hit the Apply button below to refresh your report when your data has been changed in your data source.

SSIS - Create Excel Data File (2)

Step 4: Now create a detail sheet and load it with data from a SQL table.

Now my Excel data file has been created with a summary and a detail sheet.

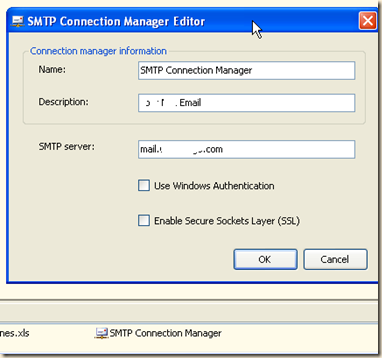

Step 5: Let’s also send the Excel file to users via email as an attachment.

The SMTP Connection Manager is define here with the appropriate SMTP server name.

SSIS – Create Excel Data File (1)

Sometimes, our business users prefer receiving Excel data files as attachment in emails (reports on the reporting server are nice, but who has time to go there).

Here is the overall design flow in SSIS to create data files in Excel format, save it onto a shared drive, and send to users via email as attachment.

Step 1: Delete any existing Excel data file first using a File System Task with Delete File operation.

The SourceConnection is defined in this File Connection Manager.

Step 2: Create the first sheet for the Excel file, the Summary sheet (it’s actually a table).

The Connection is define in this Excel Connection Manager.

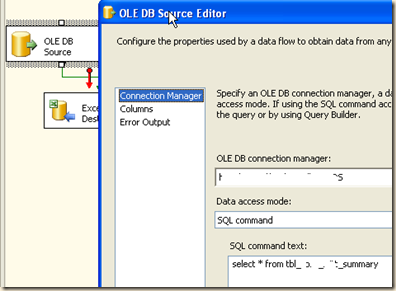

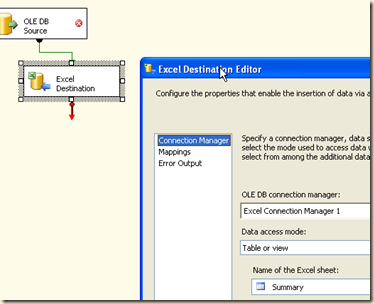

Step 3: Retrieve data from a SQL table, and Fast Load into the Excel Summary sheet (or table) we just created.

Here is the OLE DB Source that gets data from a SQL table.

Here is the Excel Destination – the Summary sheet (or table).